In Part I, I started out focusing on truck drivers, because their vulnerability is easy to understand. But I want to make it clear: even “high-skill” or “high education” professions aren’t much better. Even doctors and lawyers aren’t safe. That noise you’re hearing is the sound of a million Jewish grandmothers spinning in their graves.

All humor aside, Artificial Intelligence is improving fast. If anything, our 47% number from earlier is probably a low estimate. Even assuming that a third of that 47% are able to retrain and secure new employment (a big assumption), we’re still looking at roughly 40% of the workforce (~31% plus the current unemployment rate of 9%) being indefinitely or permanently unemployed, with most of the rest constantly looking over their shoulders for the next advancement to put them out of work.
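Spelled out, the back-of-the-envelope arithmetic behind that estimate looks like this (a rough sketch that assumes the two percentages can simply be added, even though they may not be measured against exactly the same base):

$$
47\% \times \left(1 - \tfrac{1}{3}\right) \approx 31.3\%, \qquad 31.3\% + 9\% \approx 40\%
$$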

The fact is, as AIs get smarter and smarter, the number of humans who can outperform computers in any given field approaches zero.

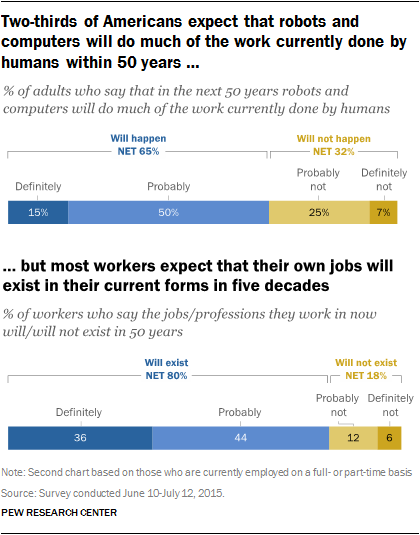

Not that people are aware of it; then again, humans are notoriously (and consistently) bad at predicting the future. Much ink has been spilled trying to overcome implicit human biases. But whether or not you take our basic optimism personally, people consistently and provably fail to recognize that future trends will inconvenience them too.

My personal response to data like this is to get angry and rail at people for being stupid. But consistently overcoming our innate cognitive biases is really, really hard, and insulting people accomplishes nothing except convincing them that you’re some kind of evil Vulcan. Besides, my own unrealistic expectation that people will do otherwise demonstrates the same cognitive fallacy; no one is immune. This is part of why I can never get away from cynicism as practical life advice.

Regardless, it’s not difficult to see that this future I’ve sketched out is unsustainable. You can’t leave half the population bored and poor and expect that things will just work themselves out. People hunger for purpose and work, and if they can’t find it in society, they’ll find it in cults, religions, and demagogues. To expect otherwise is foolish; our optimistic friends will tear it all down before they’ll accept nihilism.

Unusually for this blog, I may actually have part of the solution to one of the problems I’m outlining: specifically, part of the answer to the problem of AI displacing human workers.

Now, to be clear, this entire thing isn’t some nonsense Randian critique. I might not have made it clear enough earlier, but I strongly believe that human lives have meaning and “worth” beyond the simple monetary calculus of our economic contributions. And while I admit that I’ve just outlined a very “John Galt” scenario, with only a small fraction of people able to meaningfully contribute to the functioning of society, I will not further dignify that perspective. It is not the fault of most of humanity that they can’t exceed the capabilities of an AI, and until the transhumanists get their act together, humans are going to keep losing to Artificial Intelligence.

So, given that there will soon not be enough work for a huge percentage of people (and therefore not enough income for them to buy food, housing, or anything else, even though that spending is exactly what our collective economic machine needs to function), a new economic paradigm is needed: Guaranteed Minimum Income (GMI), or Basic Income (BI). There’s not really a ton of difference between the two; it’s more about the implementation.

If you’re not already familiar with it, GMI/BI is just socialism and safety nets at their finest. Instead of Medicare, Social Security, food stamps, or any other program, every citizen is paid a living wage by the government, with no strings or limitations. If done right, this could free mankind from the drudgery of boring toil, ensuring that everyone has the chance to find fulfilling work, or the choice not to.

This is obviously a controversial position, with unexplored long-term societal consequences. A system like GMI/BI could easily destroy self-reliance and the will to work on a societal scale; it’s hard to want to do anything if you don’t have to, especially when a computer already does it better than you can. If you want a vision of the future, picture a child asking Google for the answer. Forever. Technological dependency is already here, and it will only get worse as generations grow up without ever realizing things could be different.

*Still from WALL·E, 2008*

But even if GMI/BI does have all those negative side effects, it’s probably still necessary. The alternative is both unconscionable and unsustainable; unless the rich plan to retreat to safety while everyone else tears each other apart (hard to do reliably), we need to prioritize solutions that are good for everyone. Even if (and likely when) we no longer live in a democracy, policies that completely disregard the general welfare will still have negative consequences.

I’ve rambled a bit, but my main point is fairly simple: in a world where human labor is economically negligible, and humans therefore no longer reliably control the means of production, a more egalitarian way of sharing societal benefits is necessary.

Write about how safety is an illusion

Education needs to change. We need to think more about what people will do. Back to the farm? Machines will do that better than people can, too. A service economy? There will be no money for wars; we will need the funds for feeding the people. How then will we have population control?
